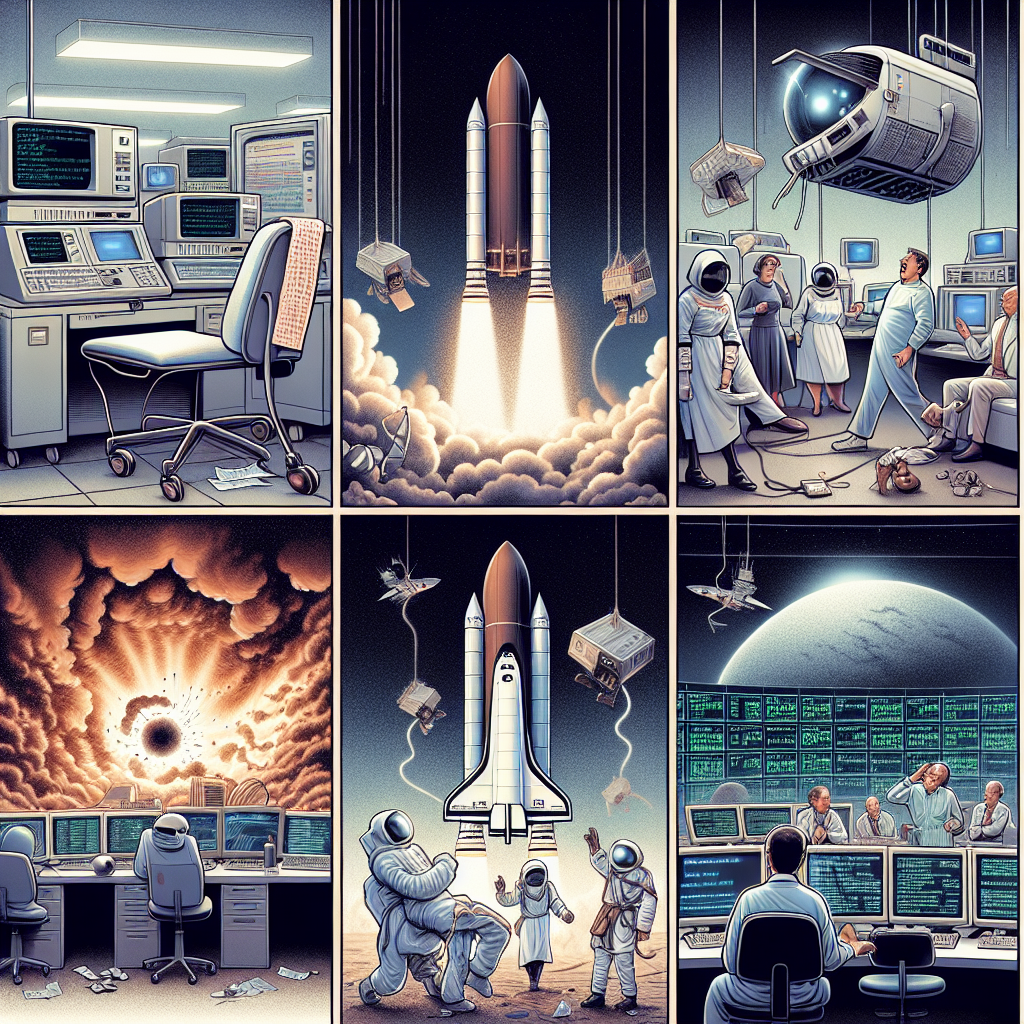

Programming is an art form as much as it is a science, and like any craft, it’s prone to mistakes. Some errors are small and manageable, but others? Well, they can have catastrophic consequences. Let’s dive into five of the worst programming mistakes in history and see what lessons they can teach us about avoiding pitfalls in our own coding journeys.

1. The Therac-25 Disaster: Life-Threatening Software Bugs

The Mistake: Between 1985 and 1987, the Therac-25 radiation therapy machine delivered massive radiation overdoses to six patients, several of them fatal. The cause was a set of software bugs, including a race condition: when an operator edited the treatment settings quickly, the machine could fire its high-power beam in the wrong configuration. Earlier models had hardware interlocks that would have blocked this, but the Therac-25’s designers removed them and trusted the software alone to manage safety.

The Impact: Patients undergoing cancer treatment received radiation overdoses that killed or seriously injured them. The case remains a standard example of the dangers of poorly designed and inadequately tested software in critical systems.

Lesson: In safety-critical applications like healthcare or aviation, thorough testing and multiple levels of fail-safes are essential. Don’t rely on software alone for crucial safety systems: hardware interlocks, redundancy, and real-world testing are all necessary.
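As a rough illustration of layering an independent check on top of software state, the sketch below refuses to fire unless a sensor readback agrees with the requested mode. It is a simplified, hypothetical model, not the Therac-25’s actual design: `Mode`, `SensorReadback`, `InterlockError`, and `check_before_firing` are invented names, and a software check like this complements physical interlocks rather than replacing them.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    ELECTRON = "electron"  # low beam current, no target in the beam path
    XRAY = "xray"          # high beam current, target must be in place


@dataclass(frozen=True)
class SensorReadback:
    """State reported by sensors, independent of the control software's variables."""
    target_in_place: bool
    beam_current_high: bool


class InterlockError(RuntimeError):
    """Raised when the sensed machine state is unsafe for the requested mode."""


def check_before_firing(requested: Mode, readback: SensorReadback) -> None:
    # Fail closed: any disagreement between request and sensed state blocks the beam.
    expected_high = requested is Mode.XRAY
    if readback.beam_current_high != expected_high:
        raise InterlockError("beam current does not match requested mode")
    if readback.target_in_place != expected_high:
        raise InterlockError("target position does not match requested mode")


# A consistent state passes silently.
check_before_firing(Mode.XRAY, SensorReadback(target_in_place=True, beam_current_high=True))

# High beam current with no target in place is rejected.
try:
    check_before_firing(Mode.ELECTRON, SensorReadback(target_in_place=False, beam_current_high=True))
except InterlockError as exc:
    print(f"blocked: {exc}")
```

The design choice worth noting is that the check reads sensed state rather than the variables the control software believes it set, so a bug in that software cannot vouch for itself.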

2. The Y2K Bug: A Problem 40 Years in the Making

The Mistake: Programmers in the early days of computing often represented the year in two digits (e.g., “70” for 1970) to save scarce memory and storage, assuming their programs would be long outdated by the year 2000. Many of those programs were still running as the year 2000 approached, raising fears that systems would interpret “00” as 1900 and cause banking systems, power grids, and everything in between to malfunction.

The Impact: The Y2K bug spurred a global scramble to patch millions of lines of code across industries. While the crisis was largely averted through a coordinated and expensive effort, it exposed the dangers of technical debt—decisions made to save space or time in the short term can have long-term, expensive consequences.

Lesson: Always design for the long term. Programmers must think about how their code will hold up not just in the next few years, but in the next few decades. Future-proofing your code is crucial, especially when it deals with dates, times, or other values whose range keeps growing.
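To make the failure concrete, here is a minimal sketch of the two-digit-year bug and one common mitigation, a fixed pivot window. The function names and the pivot value of 70 are assumptions chosen for illustration, not taken from any particular system.

```python
def naive_age(birth_yy: int, current_yy: int) -> int:
    """The classic bug: arithmetic performed directly on two-digit years."""
    return current_yy - birth_yy


def expand_two_digit_year(yy: int, pivot: int = 70) -> int:
    """Expand a two-digit year with a fixed window: pivot..99 -> 19xx, else 20xx."""
    if not 0 <= yy <= 99:
        raise ValueError(f"expected a two-digit year, got {yy}")
    return 1900 + yy if yy >= pivot else 2000 + yy


def windowed_age(birth_yy: int, current_yy: int) -> int:
    """Same calculation after expanding both years to four digits."""
    return expand_two_digit_year(current_yy) - expand_two_digit_year(birth_yy)


# Someone born in 1970, evaluated in the year 2000 ("00"):
print(naive_age(70, 0))     # negative age
print(windowed_age(70, 0))  # 30
```

Windowing only postpones the problem, since the pivot itself eventually becomes wrong. Storing full four-digit years (or a proper date type) removes the ambiguity altogether.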

3. The Mars Climate Orbiter Crash: Unit Conversion Gone Wrong

The Mistake: In 1999, NASA’s Mars Climate Orbiter was lost because of a simple but catastrophic error: a mismatch between metric and imperial units. Ground software supplied by a contractor reported thruster impulse in pound-force seconds, while NASA’s navigation software expected newton-seconds. The resulting trajectory errors brought the spacecraft into Mars’ atmosphere far lower than planned, where it is believed to have been destroyed.

The Impact: NASA lost a $125 million spacecraft due to what could be seen as a simple mistake in unit conversion. This highlighted how small errors in communication can lead to significant financial and scientific losses.

Lesson: Clear communication and strict adherence to coding standards are key, especially when collaborating with different teams or third-party contractors. Be explicit about units, formats, and standards to avoid misinterpretation, and always double-check assumptions.
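One way to be explicit about units is to make them part of the type, so a bare number can never cross a team boundary. The sketch below is an illustration under that assumption; `Impulse` and its constructors are hypothetical names, not anything from NASA’s software.

```python
from dataclasses import dataclass

# Exact by definition: 1 lbf = 4.4482216152605 N
LBF_S_TO_N_S = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse stored internally in newton-seconds, whatever unit it arrived in."""
    newton_seconds: float

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)

    @classmethod
    def from_pound_force_seconds(cls, value: float) -> "Impulse":
        return cls(value * LBF_S_TO_N_S)


def total_impulse(burns: list[Impulse]) -> Impulse:
    """Sum impulses; callers must say which unit each input was measured in."""
    return Impulse(sum(b.newton_seconds for b in burns))


burns = [
    Impulse.from_newton_seconds(10.0),
    Impulse.from_pound_force_seconds(10.0),  # converted at the boundary
]
print(total_impulse(burns).newton_seconds)
```

Because every value must pass through a named constructor, the unit is stated at the point where data enters the system, which is exactly where the mismatch in this story went unnoticed.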

4. The Knight Capital Trading Glitch: A $440 Million Mistake in 45 Minutes

The Mistake: In 2012, Knight Capital, a financial services firm, released an update to its trading software that had a fatal flaw. The update reused an old flag, and on a server that had not received the new code, that flag triggered a dormant feature that began buying and selling massive amounts of stock automatically. Within 45 minutes, the firm had accumulated roughly $7 billion in erroneous positions and lost $440 million, pushing it to the brink of bankruptcy.

The Impact: Knight Capital’s mistake cost the company its independence: it needed an emergency rescue to survive and was later acquired, ending its run as one of Wall Street’s top trading firms. The error exposed the dangers of deploying untested or poorly tested software in live environments.

Lesson: Thorough testing in real-world conditions is critical. Unit tests and code reviews are great, but you must ensure that old, legacy code doesn’t interfere with new features. Staging environments and feature flags should be rigorously tested to avoid catastrophic production errors.
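Accounts of the incident describe one server still running old code when the flag was switched on. A minimal sketch of a guard against that situation is a check that every server reports the expected build before a flag may be enabled. The function names, host names, and build labels below are all hypothetical.

```python
def stale_servers(deployed: dict[str, str], expected_build: str) -> list[str]:
    """Return the hosts whose reported build differs from the expected one."""
    return sorted(host for host, build in deployed.items() if build != expected_build)


def enable_flag(flag: str, deployed: dict[str, str], expected_build: str) -> str:
    """Enable a feature flag only if every server runs the expected build."""
    stale = stale_servers(deployed, expected_build)
    if stale:
        raise RuntimeError(
            f"refusing to enable {flag!r}: stale build on {', '.join(stale)}"
        )
    return f"{flag} enabled on {len(deployed)} servers"


# Hypothetical fleet: one host missed the rollout.
fleet = {"trade-01": "v2.0", "trade-02": "v2.0", "trade-03": "v1.4"}
try:
    enable_flag("new_order_router", fleet, expected_build="v2.0")
except RuntimeError as exc:
    print(exc)
```

A check like this does not replace removing dead code or giving each feature its own flag, but it turns a silent partial deployment into a loud failure before any orders are sent.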

5. The Ariane 5 Rocket Explosion: A Simple Overflow Error

The Mistake: In 1996, the European Space Agency’s Ariane 5 rocket exploded about 37 seconds after liftoff, all because of a software bug. The error stemmed from a data conversion: an attempt to fit a 64-bit floating-point number into a 16-bit signed integer. The value was too large to fit, the conversion raised an overflow exception that nothing handled, and the inertial reference system shut down mid-flight. The flight computer then treated that system’s diagnostic output as real flight data and steered the rocket off course.

The Impact: The explosion resulted in a $370 million loss for the European Space Agency, all due to an oversight in how data types were handled. This mistake underscored the importance of understanding the limitations of your software and its underlying architecture.

Lesson: Pay close attention to data types and how they interact in your code. Overflow and memory errors can easily slip by if you’re not careful, especially in high-performance or embedded systems where every byte of memory matters. Always account for edge cases in your design and test them rigorously.
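The narrowing conversion at the heart of this failure can be guarded explicitly. The sketch below shows two possible policies for converting a float to a 16-bit signed integer, rejecting or saturating. It is written in Python for illustration only, and the function names are assumptions.

```python
import math

INT16_MIN, INT16_MAX = -32768, 32767


def to_int16_checked(value: float) -> int:
    """Convert to the 16-bit signed range or raise; never wrap silently."""
    if not math.isfinite(value):
        raise OverflowError(f"cannot convert {value!r} to int16")
    result = int(value)  # truncates toward zero
    if not INT16_MIN <= result <= INT16_MAX:
        raise OverflowError(f"{value!r} does not fit in int16")
    return result


def to_int16_saturating(value: float) -> int:
    """Clamp out-of-range values to the nearest int16 limit instead of raising."""
    if math.isnan(value):
        raise ValueError("cannot convert NaN to int16")
    return int(max(INT16_MIN, min(INT16_MAX, value)))


print(to_int16_checked(1234.9))       # 1234
print(to_int16_saturating(40000.0))   # 32767
try:
    to_int16_checked(40000.0)
except OverflowError as exc:
    print(f"rejected: {exc}")
```

Which policy is right depends on the system. The point is that the out-of-range case is decided deliberately in the design, and that whatever the conversion raises is handled by the caller rather than left to take the whole subsystem down.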

Conclusion: Avoiding Programming Pitfalls

Each of these mistakes may seem like the result of a “small” error (a unit conversion, a reused flag, a numeric overflow), but each had massive consequences. The lesson here is clear: small errors in programming can lead to catastrophic outcomes when deployed in critical systems or large-scale applications.

To avoid these mistakes, remember the following principles:

- Test thoroughly, especially in real-world conditions.

- Communicate clearly across teams and ensure everyone is using the same standards.

- Never cut corners when it comes to safety-critical systems.

- Future-proof your code as much as possible.

- Always account for edge cases, particularly in data handling and memory management.

Programming is complex, but by learning from the past, we can write better, safer, and more reliable code in the future.
